Enterprise API adoption has gone beyond

predictions. It has become the ‘coolest’ way of exposing business

functionalities to the outside world. Both your public and private APIs, need

to be protected, monitored and managed. Here we focus on API Security. There

are so many options out there to make someone easily confused. When to select

one over the other is always a question – and you need to deal with it quite

carefully to identify and isolate the tradeoffs.

Security is not an afterthought. It has to be an

integral part of any development project – so as for APIs.

API security has evolved a lot in last five years. The growth of

standards, out there, has been exponential. OAuth is the most widely adopted standard - and almost the de-facto standard for API security

.

OAuth is a result of a community effort to build a common standard based solution for identity delegation. Its design was well fed with pre-OAuth vendor specific protocols like, Google AuthSub, Yahoo BBAuth and Flicker Auth.

The core concept behind OAuth is to generate a short-lived temporary token under the approval of the resource owner and share it with the client who wants to access the resource on behalf of its owner. This is well explained by Eran Hammer, taking a parking valet key as an analogy. Valet key will let a third party to drive, but with restrictions like, only a mile or two. Also you cannot use the valet key to do anything other than driving, like opening the trunk. Likewise the temporary token issued under OAuth can only be used for the purpose its been issued to - not for anything else. If you authorize a third party application to import photos from your Flickr account via OAuth, that application can use the OAuth key for that purpose only. It cannot delete or add new photos. This core concept remains the same from OAuth 1.0 to OAuth 2.0.

What made OAuth 2.0 looks different from OAuth 1.0?

OAuth 1.0 is a standard, built for identity delegation. OAuth 2.0 is a highly extensible authorization framework. The best selling point in OAuth 2.0 is its extensibility by being an authorization framework.

OAuth 1.0 is coupled with signature-based security. Although it has provisions to use different signature algorithms, still it’s signature based. One of the key criticisms against OAuth 1.0 is the burden enforced on OAuth clients for signature calculation and validation. This is not a completely valid argument. This is where we need proper tools to the rescue. Why an application developer needs to worry about signature handling? Delegate that to a third party library and stay calm. If you think OAuth 2.0 is better than OAuth 1.0 because of the simplicity added through OAuth 2.0 Bearer Token profile (against the signature based tokens in 1.0) – you’ve been misled.

Let me reiterate. The biggest advantage of OAuth 2.0 is its extensibility. The core OAuth 2.0 specification is not tightly coupled with a token type. There are several OAuth profiles been discussed under IETF OAuth working group at the moment. The Bearer token profile is already a proposed IETF standard - RFC 6750.

The

Bearer token profile is the mostly used one today for API Security. The access token used under Bearer token profile is a randomly generated string. Anyone who is in possession of this token can use it to access a secured API. In fact, that is what the name implies too. The protection of this token is facilitated through the underlying transport channel via TLS. TLS only provides the security while in transit. It's the responsibility of the OAuth Token Issuer (or the Authorization Server) and the OAuth client to protect the access token while being stored. Most of the cases, access token needs to be encrypted. Also, the token issuer needs to guarantee the randomness of the generated access token - and it has to be long enough to exhaust any brute-force attacks.

OAuth 2.0 has three major phases (To be precise the phase - 1 and phase - 2 could overlap based on the grant type).

1. Requesting an Authorization Grant.

2. Exchanging the Authorization Grant for an Access Token.

3. Access the resources with the Access Token.

OAuth 2.0 core specification does not mandate any access token type. Also the requester or the client cannot decide which token type it needs. It's purely up to the Authorization Server to decide which token type to be returned in the Access Token response - which is the phase 2.

The access token type provides the client the information required to successfully utilize the access token to make a request to the protected resource (along with type-specific attributes). The client must not use an access token if it does not understand the token type.

Each access token type definition specifies the additional attributes (if any) sent to the client together with the "access_token" response parameter. It also defines the HTTP authentication method used to include the access token when making a request to the protected resource.

For example following is what you get for the Access Token response irrespective of which grant type you use (To be precise, if the grant type is client credentials, there won’t be any refresh_token in the response).

HTTP/1.1 200 OK

Content-Type: application/json;charset=UTF-8

Cache-Control: no-store

Pragma: no-cache

{

"access_token":"mF_9.B5f-4.1JqM",

"token_type":"Bearer",

"expires_in":3600,

"refresh_token":"tGzv3JOkF0XG5Qx2TlKWIA"

}

The above is for the Bearer and following is for the MAC.

HTTP/1.1 200 OK

Content-Type: application/json

Cache-Control: no-store

{

"access_token":"SlAV32hkKG",

"token_type":"mac",

"expires_in":3600,

"refresh_token":"8xLOxBtZp8",

"mac_key":"adijq39jdlaska9asud",

"mac_algorithm":"hmac-sha-256"

}

The

MAC Token Profile is very much closer to what we have in OAuth 1.0.

OAuth authorization server will issue a MAC key along with the signature algorithm to be used and an access token that can be used as an identifier for the MAC key. Once the client has access to the MAC key, it can use it to sign a normalized string derived from the request to the resource server. Unlike in Bearer token, the MAC key will never be shared between the client and the resource server. It’s only known to the authorization server and the client. Once the resource server gets the signed message with MAC headers, it has to validate the signature by talking to the authorization server. Under the MAC token profile, TLS is only needed for the first step, during the initial handshake where the client gets the MAC key from the authorization server. Calls to the resource server need not to be on TLS, as we never expose MAC key over the wire.

MAC Access Token response has two additional attributes. mac_key and the mac_algorithm. Let me rephrase this - "Each access token type definition specifies the additional attributes (if any) sent to the client together with the "access_token" response parameter".

The MAC Token Profile defines the HTTP MAC access authentication scheme, providing a method for making authenticated HTTP requests with partial cryptographic verification of the request, covering the HTTP method, request URI, and host. In the above response access_token is the MAC key identifier. Unlike in Bearer, MAC token profile never passes it's top secret over the wire.

The access_token or the MAC key identifier is a string identifying the MAC key used to calculate the request MAC. The string is usually opaque to the client. The server typically assigns a specific scope and lifetime to each set of MAC credentials. The identifier may denote a unique value used to retrieve the authorization information (e.g. from a database), or self-contain the authorization information in a verifiable manner (i.e. a string consisting of some data and a signature).

The mac_key is a shared symmetric secret used as the MAC algorithm key. The server will not reissue a previously issued MAC key and MAC key identifier combination.

Phase-3 will utilize the access token obtained in phase-2 to access the protected resource.

Following shows how the Authorization HTTP header looks like when Bearer Token being used.

Authorization: Bearer mF_9.B5f-4.1JqM

This adds very low overhead on client side. It simply needs to pass the exact access_token it got from the Authorization Server in phase-2.

Under MAC token profile, this is how it looks like.

Authorization: MAC id="h480djs93hd8",

ts="1336363200",

nonce="dj83hs9s",

mac="bhCQXTVyfj5cmA9uKkPFx1zeOXM="

id is the MAC key identifier or the access_token from the phase-2.

ts the request timestamp. The value is a positive integer set by the client when making each request to the number of seconds elapsed from a fixed point in time (e.g. January 1, 1970 00:00:00 GMT). This value is unique across all requests with the same timestamp and MAC key identifier combination.

nonce is a unique string generated by the client. The value is unique across all requests with the same timestamp and MAC key identifier combination.

The client uses the MAC algorithm and the MAC key to calculate the request mac.

Either we use Bearer or MAC - the end user or the resource owner is identified using the access_token. Authorization, throttling, monitoring or any other quality of service operations can be carried out against the access_token irrespective of which token profile you use.

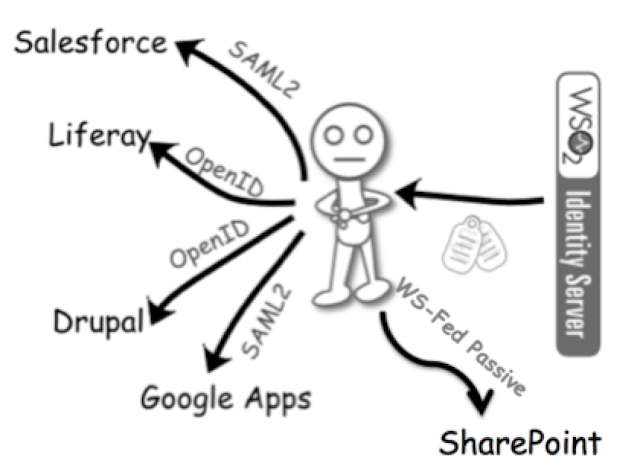

APIs are not just for internal employees. Customers and partners can access public APIs, where we do

not maintain credentials internally. In that case we cannot directly

authenticate them. So, we have to have a federated authentication setup for

APIs, where we would trust a given partner domain, but not individuals. The

SAML

2.0 Bearer Assertion Profile for OAuth 2.0 addresses this concern.

The SAML 2.0 Bearer Assertion Profile, which is built on top of OAuth 2.0 Assertion Profile, defines the use of a SAML 2.0 Bearer Assertion as a way of requesting an OAuth 2.0 access token as well as a way of authenticating the client. Under OAuth 2.0, the way of requesting an access token is known as a grant type. Apart from making the token type decoupled from the core specification it also makes grant type decoupled too. Grant type defines a protocol to get the authorized access token from the resource owner. The OAuth 2.0 core specification defines four grant types - authorization code, implicit, client credentials and resource owner password. But it does not limit to four. A grant type is another way of extending the OAuth 2.0 framework. OAuth 1.0 was coupled to a single grant type, which is almost similar to the authorization code grant type in 2.0.

SAML2 Bearer Assertion Profile defines its own grant type (

urn:ietf:params:oauth:grant-type:saml2-bearer). Using this grant type a client can get either a MAC token or a Bearer token from the OAuth authorization server.

A good use case for SAML2 grant type is a SAML2 Single Sign On (SSO) scenario. A partner employee can login to a web application using SAML2 SSO (we have to trust the partner's SAML2 IdP) and later the web application needs to access a secured API on behalf of the logged in user. To do that the web application can use the SAML2 assertion already provided and exchange that to an OAuth access token via SAML2 grant type. There we need to have an OAuth Authorization Server running inside our domain - which trusts the external SAML2 IdP.

Unlike the four other grant types defined in OAuth 2.0 core specification, SAML2 grant type needs the resource owner to define the allowed scope for a given client out-of-band.

JSON Web Token (JWT) Bearer Profile is almost the same as the SAML2 Assertion Profile. Instead of SAML tokens, this uses JSON Web Tokens. JWT Bearer profile also introduces a new grant type (

urn:ietf:params:oauth:grant-type:jwt-bearer).

This provision for extensibility made OAuth 2.0 very much superior to OAuth 1.0. That does not mean it’s perfect in all means.

To be the de facto standard for API security, OAuth 2.0 needs to operate in a highly distributed manner and still be interoperable. We need to have clear boundaries and well-defined interfaces in between the client, the authorization server and the resource server. OAuth 2.0 specification breaks it into two major flows. The first is the process of getting the access token from the authorization server - which is based on a grant type. The second is the process of using it in a request to the resource server. The way the resource server talks to the authorization server to validate the token is not addressed in the core specification. Hence has lead vendor specific APIs to creep in between the resource server and the authorization server. This kills interoperability. The resource server is coupled with the authorization server and this results in vendor lock-in.

The Internet draft

OAuth Token Introspection which is been discussed under the IETF OAuth working group at the moment defines a method for a client or a protected resource (resource server) to query an OAuth authorization server to determine metadata about an OAuth token. The resource server needs to send the access token and the resource id (which is going to be accessed)- to the authorization server's introspection endpoint. Authorization server can check the validity of the token - evaluate any access control rules around it - and send back the response to the resource server. In addition to the token validity information, it will further return back the scopes, client_id and some other metadata associated with the token.

Apart from having a well-defined interface between the OAuth authorization server and the resource server, a given authorization server should also have the capability to issue tokens of different types. To do this, the client should bring the required token type it needs in the authorization request. But in the OAuth authorization request there is no token type defined. This limits the capability of the authorization server to handle multiple token types simultaneously or it will require a form of out-of-band mechanism to associate token types against clients.

Both the authorization server and the resource server should have the ability to expose their capabilities and requirements through a standard metadata endpoint.

The resource server should be able to expose its metadata by resource, which type of a token a given request expects, the required scope likewise. Also the requirements could change based on the token type. If it is a MAC token, then the resource server needs to declare which signature algorithm it expects. This could be possibly supported via an OAuth extension to the WADL (Web Application Description Language). Similarly, the authorization server also needs to expose its metadata. These could be, the supported token types, grant types likewise.

User-Managed Access (UMA) Profile of OAuth 2.0 introduces a standard endpoint to share metadata at the authorization server level. The authorization server can publish its token end point, supported token types and supported grant types via this UMA authorization server configuration data endpoint as a JSON document.

The UMA profile also mandates a set of UMA specific metadata to be published through this end point. This couples the authorization server to UMA, which also addresses a bigger problem than the need to discover authorization server metadata. It would be more ideal to introduce the need to publish/discover authorization server metadata through an independent OAuth profile and extend that in UMA to address more UMA specific requirements.

The problem addressed by UMA is far beyond, than just exposing Authorization Server metadata. UMA, undoubtedly going to be one of the key ingredients in any ecosystem for API security.

UMA defines how resource owners can control protected-resource access by clients operated by arbitrary requesting parties, where the resources reside on any number of resource servers, and where a centralized authorization server governs access based on resource owner policy. UMA defines two standard interfaces for the Authorization Server. One interface is between the Authorization Server and the Resource Server (protection API), while the other is between Authorization Server and Client (authorization API).

To initiate the UMA flow, the resource owner has to introduce all his resource servers to the centralized authorization server. With this, each resource server will get an access_token from the authorization server - and that can be used by resource servers to access the protection API exposed out by the authorization server.The API consists of an OAuth resource set registration endpoint as defined by OAuth Resource Registration draft specification, an endpoint for registering client-requested permissions, and an OAuth token introspection endpoint.

Client or the Requesting party can be unknown to the resource owner. When it tries to access a resource, the resource server will provide the necessary details - so, the requesting party can talk to the authorization server via Authorization API and get a Requesting Party Token (RPT). This API once again is OAuth protected - so, the requesting party should be known to the authorization server.

Once the client has the RPT - it can present it to the Resource Server and get access to the protected resource. Resource Server uses OAuth introspection endpoint of the Authorization Server to validate the token.

This is a highly distributed, decoupled setup - and further can be extended by incorporating SAML2 grant type.

Token revocation is also an important aspect in API security.

Most of the OAuth authorization servers currently utilize vendor specific APIs. This couples the resource owner to a proprietary API, leading to vendor lock-in. This aspect is not yet being addressed by the OAuth working group. The Token Revocation RFC 7009 addresses a different concern. This proposes an endpoint for OAuth authorization servers, which allows clients to notify the authorization server when a previously obtained refresh or access token is no longer needed.

In most of the cases token revocation by the resource owner will be more prominent than the token revocation by the client as proposed in this draft. The challenge in developing a profile to revoke access tokens / refresh tokens by the resource owner is the lack of token metadata at the resource owner end. The resource owner does not have the visibility to the access token. In that case the resource owner needs to talk to a standard end point at the authorization server to discover the clients it had authorized before. As per the OAuth 2.0 core specification a client is known to the authorization server via the client-id attribute. Passing this back to the resource owner is less meaningful as in most of the cases it’s an arbitrary string. This can be fixed by introducing a new attribute called “friendly-name”.

The model proposed in both OAuth 1.0 as well as in OAuth 2.0 is client initiated. Client is the one who starts the OAuth flow, by first requesting an access token. How about the other way around? Resource owner initiated OAuth delegation. Say for example I am a user of an online photo-sharing site. There can be multiple clients like Facebook applications, Twitter applications registered with it. Now I want to pick some client applications from the list and give them access to my photos under different scopes. Let’s take another example; I am an employee of Foo.com. I'll be going on vacation for two weeks - now I want to delegate some of my access rights to Peter only for that period of time. Conceptually OAuth fits nicely here. But - this is a use case, which is initiated by the Resource Owner - which is not being addressed in the OAuth specification. This would require introducing a new resource owner initiated grant type. The Owner Authorization Grant Type Profile Internet draft for OAuth 2.0 addresses a similar concern by allowing the resource owner to directly authorize a relying party or a client to access a resource.

Delegated access control talks about performing actions on behalf of another user. This is what OAuth addresses. Delegated “chained” access control takes one step beyond this. The OASIS WS-Trust (a speciation built on top of WS-Security for SOAP) specification addressed this concern from its 1.4 version on wards, by introducing the “Act-As” attribute. The resource owner delegates access to the client and the client uses the authorized access token to invoke a service resides in the resource server. This is OAuth so far. In a real enterprise use case it’s a common requirement that the resource or the service, needs to access another service or a set of services to cater a given request. In this scenario the first service going to act as the client to the second service, and also it needs to act on behalf of the original resource owner. Using the access token passed to it as it is - is not the ideal solution. The

Chain Grant Type Internet draft for OAuth 2.0 is an effort to fix this. It defines a method by which an OAuth protected service or a resource, can use a received OAuth token from its client, in turn, to act as a client and access another OAuth protected service. This specification still at its draft-1 would require maturing soon to address these concerns in real enterprise API security scenarios.

The beauty of the extensibility produced by OAuth 2.0 should never be underestimated by any of the above concerns or limitations. OAuth 2.0 is on the right track to become the de facto standard for API security to address enterprise scale security concerns.